How LMArena Collaborated with E2B to Build LLM Web Development Evals

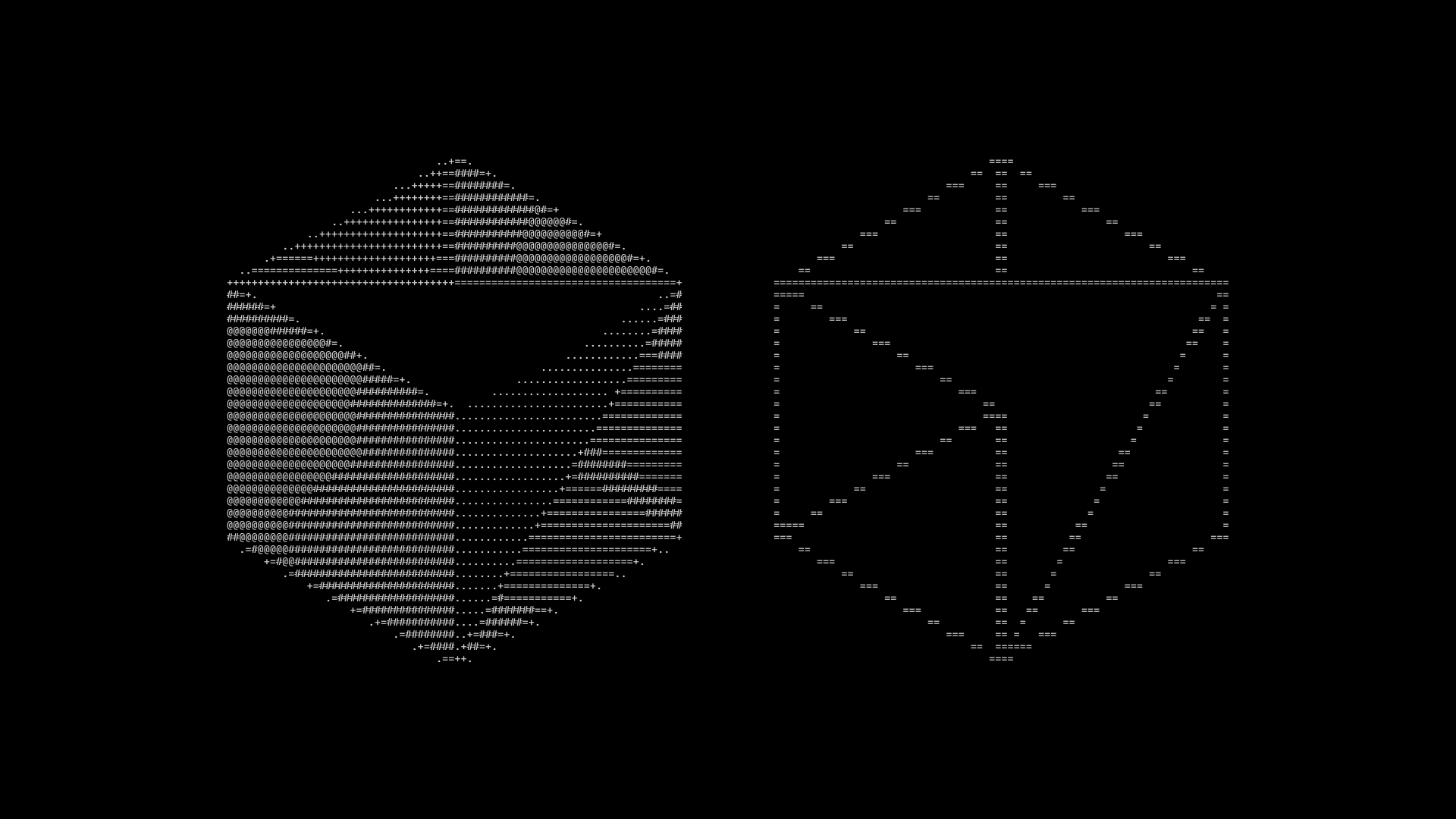

LMArena, a UC Berkeley research team, created WebDev Arena to evaluate the capabilities of large language models (LLMs) in web development. It’s a free, open-source arena where two LLMs compete to build a web app.

You can vote on which LLM performs better and view a leaderboard of the best models.

The challenges of LLM coding evals

LMArena faced key technical challenges in testing multiple LLMs building real-time web applications.

When benchmarking LLMs on web development tasks, there's a fundamental need to run the code output. While getting LLMs to generate code is straightforward, running it securely at scale presents several challenges:

Speed: For meaningful comparisons, the evaluator needs to see the two LLMs' outputs nearly simultaneously. When you're comparing output from two or more models at once, even small delays compound quickly and distort the voting results.
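To illustrate why concurrency matters here, the following is a minimal sketch, not WebDev Arena's actual code. The `build_app` coroutine is a hypothetical stand-in for "generate code with a model and run it in a sandbox", and the delays are made-up placeholders. Running both contenders concurrently means the voter waits roughly as long as the slower model, rather than the sum of both.

```python
import asyncio
import time


async def build_app(model: str, delay_s: float) -> tuple[str, float]:
    """Hypothetical stand-in for generating code and starting its sandbox."""
    start = time.perf_counter()
    await asyncio.sleep(delay_s)  # placeholder for generation + sandbox startup
    return model, time.perf_counter() - start


async def run_battle(contenders: dict[str, float]) -> tuple[list[tuple[str, float]], float]:
    """Run every contender concurrently; return per-model results and total wall time."""
    start = time.perf_counter()
    results = await asyncio.gather(
        *(build_app(model, delay) for model, delay in contenders.items())
    )
    return list(results), time.perf_counter() - start


if __name__ == "__main__":
    # Model names and delays are illustrative assumptions only.
    results, total = asyncio.run(run_battle({"model-a": 0.1, "model-b": 0.3}))
    for model, elapsed in results:
        print(f"{model}: ready after {elapsed:.2f}s")
    print(f"both outputs visible after {total:.2f}s")
```

`asyncio.gather` returns results in the order the coroutines were passed in, so the two outputs can be mapped back to their side of the comparison regardless of which finished first.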

Code execution: Running coding evaluations means handling large amounts of code simultaneously. Each LLM can generate extensive code, from simple DOM manipulations to complex React components. Running these side by side requires significant computational resources.

Isolation and security: Each piece of LLM-generated code needs to run in a completely isolated environment. When you're comparing models like Claude and GPT-4 simultaneously, their code can't interfere with each other, share any execution context, or gain access to the host system.

LLM code execution

These challenges led LMArena to implement E2B as their execution environment. Under the hood, each sandbox is a small VM, allowing WebDev Arena to run code from multiple LLMs simultaneously while maintaining strict isolation and performance standards.

Key factors in this decision included:

- Security first - E2B's isolated environments ensure each LLM's code runs separately and securely

- Quick startup - E2B sandboxes start in ~150ms, essential for real-time model comparison

- Reliability at scale - Running multiple sandboxes simultaneously for different LLM battles

E2B sandboxes provide isolated cloud environments specifically designed for running AI-generated code. E2B is agnostic about the choice of tech stack, frameworks, and models, which lets WebDev Arena run code from different LLMs (e.g., Claude, GPT-4, Gemini, DeepSeek, and Qwen).

“We have over 50,000 users who started over 230,000 E2B sandboxes.”

Implementing E2B

LMArena implemented E2B for WebDev Arena in November 2024. The integration involved working closely with E2B's team to ensure reliable execution of code from multiple LLMs.

The implementation consisted of handling sandbox creation and code execution. Here's how it works:

- Sandbox Configuration and Creation: The team implemented a flexible sandbox system with configurable timeouts and templates.

- Dynamic Dependency Management: The system supports dynamic package installation based on LLM requirements:

  - Automatic detection of additional dependencies

  - Custom installation commands for different environments

  - Real-time logging of dependency installation status

- Code Execution Pipeline: The system handles two types of code execution:

  - Code Interpreter Mode: For running and evaluating code with detailed output

  - Web Development Mode: For deploying and accessing web applications
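The configuration and dependency-detection steps above can be sketched roughly as follows. This is an illustration under stated assumptions, not WebDev Arena's implementation, and it deliberately does not call the E2B SDK. `SandboxConfig`, `PREINSTALLED`, the template name, and the `@/` alias convention for local imports are all hypothetical. The idea is to scan the generated JavaScript/TypeScript for imports, skip anything already assumed to be in the template, and build an install command for the rest.

```python
import re
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical: packages assumed to be preinstalled in the sandbox template.
PREINSTALLED = {"react", "react-dom", "next"}

# Matches `import ... from "pkg"` and bare `import "pkg"` in JS/TS source.
IMPORT_RE = re.compile(r"""import\s+(?:[^'"]*?\s+from\s+)?['"]([^'"]+)['"]""")


@dataclass(frozen=True)
class SandboxConfig:
    template: str                     # hypothetical template name
    timeout_s: int = 300              # assumed default; configurable per sandbox
    install_cmd: str = "npm install"  # can differ per environment


def package_name(specifier: str) -> Optional[str]:
    """Map an import specifier to a package name; None for local paths."""
    if specifier.startswith((".", "/", "@/")):
        return None
    parts = specifier.split("/")
    # Scoped packages keep two segments, e.g. "@radix-ui/react-dialog".
    return "/".join(parts[:2]) if specifier.startswith("@") else parts[0]


def detect_dependencies(code: str) -> List[str]:
    """Return the sorted packages the code imports that are not preinstalled."""
    names = {package_name(spec) for spec in IMPORT_RE.findall(code)}
    return sorted(n for n in names if n and n not in PREINSTALLED)


def install_command(config: SandboxConfig, deps: List[str]) -> Optional[str]:
    """Build the install command to run in the sandbox, or None if nothing is missing."""
    return f"{config.install_cmd} {' '.join(deps)}" if deps else None


if __name__ == "__main__":
    generated = '''
    import React, { useState } from "react";
    import { LineChart } from "recharts";
    import { Button } from "@/components/ui/button";
    '''
    config = SandboxConfig(template="nextjs-app")
    print(install_command(config, detect_dependencies(generated)))
```

In a real integration, the resulting command string would be executed inside the sandbox and its output streamed back as the installation log. A regex is a simplification here; a production system would more likely use a proper parser to handle dynamic imports and `require` calls.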

“It took 2 hours to get E2B up and running.”

What's next

Since the launch of WebDev Arena with E2B's code execution layer, the platform has run over 50,000 model comparisons. Claude 3.5 Sonnet currently tops the leaderboard, followed closely by DeepSeek-R1. The team plans to expand the benchmark with more models and to run online general-purpose coding evaluations in the future.

“We plan to expand to more software engineering tasks.”
