At the time of writing, Llama 3 is one of the most capable open-source models. In this guide, we give Llama 3 code interpreter capabilities and test it on data analysis and data visualization tasks.

Code Interpreter SDK

We will show how to build a code interpreter with Llama 3 running on Groq, powered by the open-source Code Interpreter SDK by E2B. The SDK quickly creates a secure cloud sandbox powered by Firecracker. Inside this sandbox is a running Jupyter server that the LLM can use.

Key links

Overview

Setup

Configuration and API keys

Creating code interpreter

Calling Llama 3

Connecting Llama 3 and code interpreter

Setup

We will be working in a Jupyter notebook. First, we install the E2B Code Interpreter SDK and Groq's Python SDK.

%pip install groq e2b_code_interpreter

Configuration and API keys

Then we store the Groq and E2B API keys and set the model name for the Llama 3 instance we will use. The system prompt defines the rules for the interaction with Llama, and the tools list describes the execute_python function that the model can call.

GROQ_API_KEY = ""
E2B_API_KEY = ""
MODEL_NAME = "llama3-70b-8192"
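Instead of pasting the keys into the notebook, they can be read from the environment. The following is a minimal sketch, assuming the keys were exported under the environment variable names GROQ_API_KEY and E2B_API_KEY; `load_api_key` is a hypothetical helper, not part of either SDK.

```python
import os


def load_api_key(name: str) -> str:
    """Return the value of environment variable `name`, or raise if it is unset or blank."""
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(f"Environment variable {name} is not set")
    return value


# Possible use in the notebook (the variable names are assumptions):
# GROQ_API_KEY = load_api_key("GROQ_API_KEY")
# E2B_API_KEY = load_api_key("E2B_API_KEY")
```

Failing early with a clear message makes a missing key easier to diagnose than an authentication error returned later by the API.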

SYSTEM_PROMPT = """you are a python data scientist. you are given tasks to complete and you run python code to solve them.
- the python code runs in jupyter notebook.
- every time you call `execute_python` tool, the python code is executed in a separate cell. it's okay to make multiple calls to `execute_python`.
- display visualizations using matplotlib or any other visualization library directly in the notebook. don't worry about saving the visualizations to a file.
- you have access to the internet and can make api requests.
- you also have access to the filesystem and can read/write files.
- you can install any pip package (if it exists) if you need to but the usual packages for data analysis are already preinstalled.
- you can run any python code you want, everything is running in a secure sandbox environment"""

tools = [
    {
        "type": "function",
        "function": {
            "name": "execute_python",
            "description": "Execute python code in a Jupyter notebook cell and return any result, stdout, stderr, display_data, and error.",
            "parameters": {
                "type": "object",
                "properties": {
                    "code": {
                        "type": "string",
                        "description": "The python code to execute in a single cell.",
                    }
                },
                "required": ["code"],
            },
        },
    }
]
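When the model decides to use the tool, its arguments arrive as a JSON string that should match the parameters schema above. The sketch below shows one way to decode and sanity-check that string before running anything. `parse_tool_arguments` is a hypothetical helper and only covers the small subset of JSON Schema used here (required keys and string types).

```python
import json

# Trimmed copy of the "parameters" schema from the tools list, repeated so the sketch runs on its own.
EXECUTE_PYTHON_PARAMETERS = {
    "type": "object",
    "properties": {"code": {"type": "string"}},
    "required": ["code"],
}


def parse_tool_arguments(raw_arguments: str, parameters: dict) -> dict:
    """Decode a tool call's JSON arguments and check required keys and string types."""
    args = json.loads(raw_arguments)
    if not isinstance(args, dict):
        raise ValueError("tool arguments must be a JSON object")
    for key in parameters.get("required", []):
        if key not in args:
            raise ValueError(f"missing required argument: {key}")
    for key, spec in parameters.get("properties", {}).items():
        if key in args and spec.get("type") == "string" and not isinstance(args[key], str):
            raise ValueError(f"argument {key} must be a string")
    return args


example = parse_tool_arguments('{"code": "print(1 + 1)"}', EXECUTE_PYTHON_PARAMETERS)
print(example["code"])
```

A check like this turns a malformed tool call into an explicit error instead of a KeyError deeper in the pipeline.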

Creating code interpreter

We define the main function, which uses the E2B code interpreter to execute code in a Jupyter notebook cell. We will call this function later, when we parse the tool calls in Llama's response.

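def code_interpret(e2b_code_interpreter, code):
    print("Running code interpreter...")
    # Run the code in a notebook cell inside the sandbox and stream its output.
    # The variable is named `execution` to avoid shadowing the built-in exec().
    execution = e2b_code_interpreter.notebook.exec_cell(
        code,
        on_stderr=lambda stderr: print("[Code Interpreter]", stderr),
        on_stdout=lambda stdout: print("[Code Interpreter]", stdout),
    )

    if execution.error:
        # On failure, report the error and return None.
        print("[Code Interpreter ERROR]", execution.error)
        return None
    else:
        return execution.results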
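To try the function above without an E2B sandbox, the interpreter can be replaced by a local stand-in. The sketch below assumes only what `code_interpret` relies on: an object with `notebook.exec_cell(code, on_stderr, on_stdout)` whose return value has `error` and `results` attributes. `FakeNotebook` is hypothetical and not part of the E2B SDK; it runs code in the local process with no sandboxing, and its results are plain captured stdout rather than the SDK's result objects.

```python
import contextlib
import io
from types import SimpleNamespace


class FakeNotebook:
    """Hypothetical local stand-in for testing; NOT part of the E2B SDK."""

    def exec_cell(self, code, on_stderr=None, on_stdout=None):
        stdout = io.StringIO()
        try:
            with contextlib.redirect_stdout(stdout):
                exec(code, {})  # runs locally, without any sandboxing
        except Exception as exc:
            return SimpleNamespace(error=repr(exc), results=[])
        if on_stdout and stdout.getvalue():
            on_stdout(stdout.getvalue())
        # Assumption: captured stdout stands in for the real result objects.
        return SimpleNamespace(error=None, results=[stdout.getvalue()])


fake_interpreter = SimpleNamespace(notebook=FakeNotebook())


def code_interpret(e2b_code_interpreter, code):
    # Same logic as the function above, repeated so the sketch is self-contained.
    execution = e2b_code_interpreter.notebook.exec_cell(
        code,
        on_stderr=lambda stderr: print("[Code Interpreter]", stderr),
        on_stdout=lambda stdout: print("[Code Interpreter]", stdout),
    )
    if execution.error:
        print("[Code Interpreter ERROR]", execution.error)
        return None
    return execution.results


ok = code_interpret(fake_interpreter, "print(1 + 1)")
failed = code_interpret(fake_interpreter, "1 / 0")
```

Here `ok` holds the captured output of the first snippet, while `failed` is None because the second snippet raises an error.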

Calling Llama 3

Now we define the chat_with_llama function. It calls the LLM with our tools list, parses the response, and passes any generated code to the code_interpret function defined above.

See the Groq documentation to get started.

import json

from groq import Groq

client = Groq(api_key=GROQ_API_KEY)

def chat_with_llama(e2b_code_interpreter, user_message):
    print(f"\n{'='*50}\nUser message: {user_message}\n{'='*50}")

    messages = [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user_message}
    ]

    response = client.chat.completions.create(
        model=MODEL_NAME,
        messages=messages,
        tools=tools,
        tool_choice="auto",
        max_tokens=4096,
    )

    response_message = response.choices[0].message
    tool_calls = response_message.tool_calls

    if tool_calls:
        for tool_call in tool_calls:
            function_name = tool_call.function.name
            # The arguments arrive as a JSON string.
            function_args = json.loads(tool_call.function.arguments)
            if function_name == "execute_python":
                code = function_args["code"]
                code_interpreter_results = code_interpret(e2b_code_interpreter, code)
                # Return as soon as the first tool call has been executed.
                return code_interpreter_results
            else:
                raise Exception(f"Unknown tool {function_name}")
    else:
        print(f"(No tool call in model's response) {response_message}")
        return []
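Note that chat_with_llama returns as soon as the first tool call has run, so any further tool calls in the same response are ignored. If every call should be handled, the code snippets could first be collected in order, as in the sketch below. `extract_code_snippets` and `fake_tool_call` are hypothetical names; the stand-in objects mimic only the attributes read in the function above (`tool_calls`, `function.name`, `function.arguments`) and are not Groq SDK types.

```python
import json
from types import SimpleNamespace


def extract_code_snippets(response_message):
    """Return the code argument of every execute_python tool call, in order."""
    snippets = []
    for tool_call in response_message.tool_calls or []:
        if tool_call.function.name != "execute_python":
            raise ValueError(f"Unknown tool {tool_call.function.name}")
        arguments = json.loads(tool_call.function.arguments)
        snippets.append(arguments["code"])
    return snippets


def fake_tool_call(code):
    # Hypothetical stand-in exposing only the attributes used above.
    return SimpleNamespace(
        function=SimpleNamespace(
            name="execute_python",
            arguments=json.dumps({"code": code}),
        )
    )


message = SimpleNamespace(
    tool_calls=[fake_tool_call("x = 2"), fake_tool_call("print(x * 21)")]
)
snippets = extract_code_snippets(message)
print(snippets)  # ['x = 2', 'print(x * 21)']
```

Each snippet could then be passed to code_interpret in turn.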

Connecting Llama 3 and code interpreter

Finally, we instantiate the code interpreter, passing it the E2B API key. Then we call the chat_with_llama function with the code_interpreter instance and our user message.

from e2b_code_interpreter import CodeInterpreter

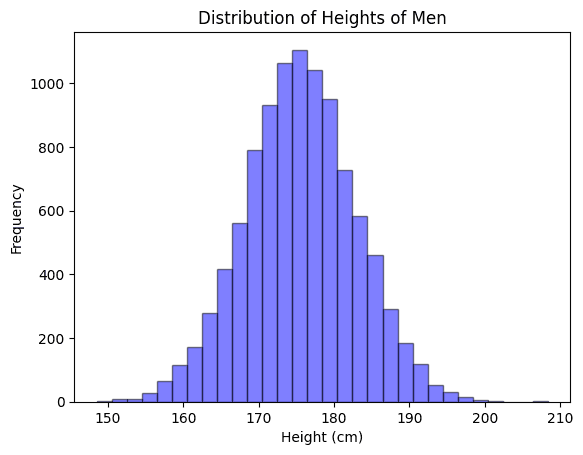

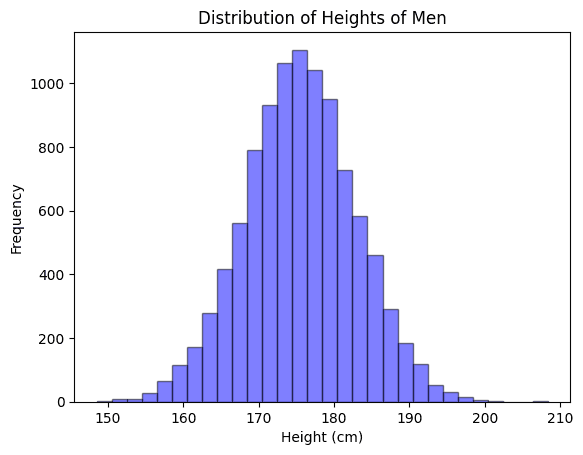

with CodeInterpreter(api_key=E2B_API_KEY) as code_interpreter:

code_results = chat_with_llama(

code_interpreter,

"Visualize a distribution of height of men based on the latest data you know"

)

if code_results:

first_result = code_results[0]

else:

print("No code results")

exit(0)

first_result
